A growing interest in organizational safety is driving the development of novel fire evacuation training solutions. Past attempts have often used immersive virtual reality (VR) systems to enhance trainees’ understanding by allowing them to safely navigate hazardous scenarios. The predominantly audiovisual nature of such simulations has, however, attracted criticism for limited validity. It has instead been suggested that future VR simulations should incorporate multisensory interfaces to replicate real-world situations more realistically.

Objectives

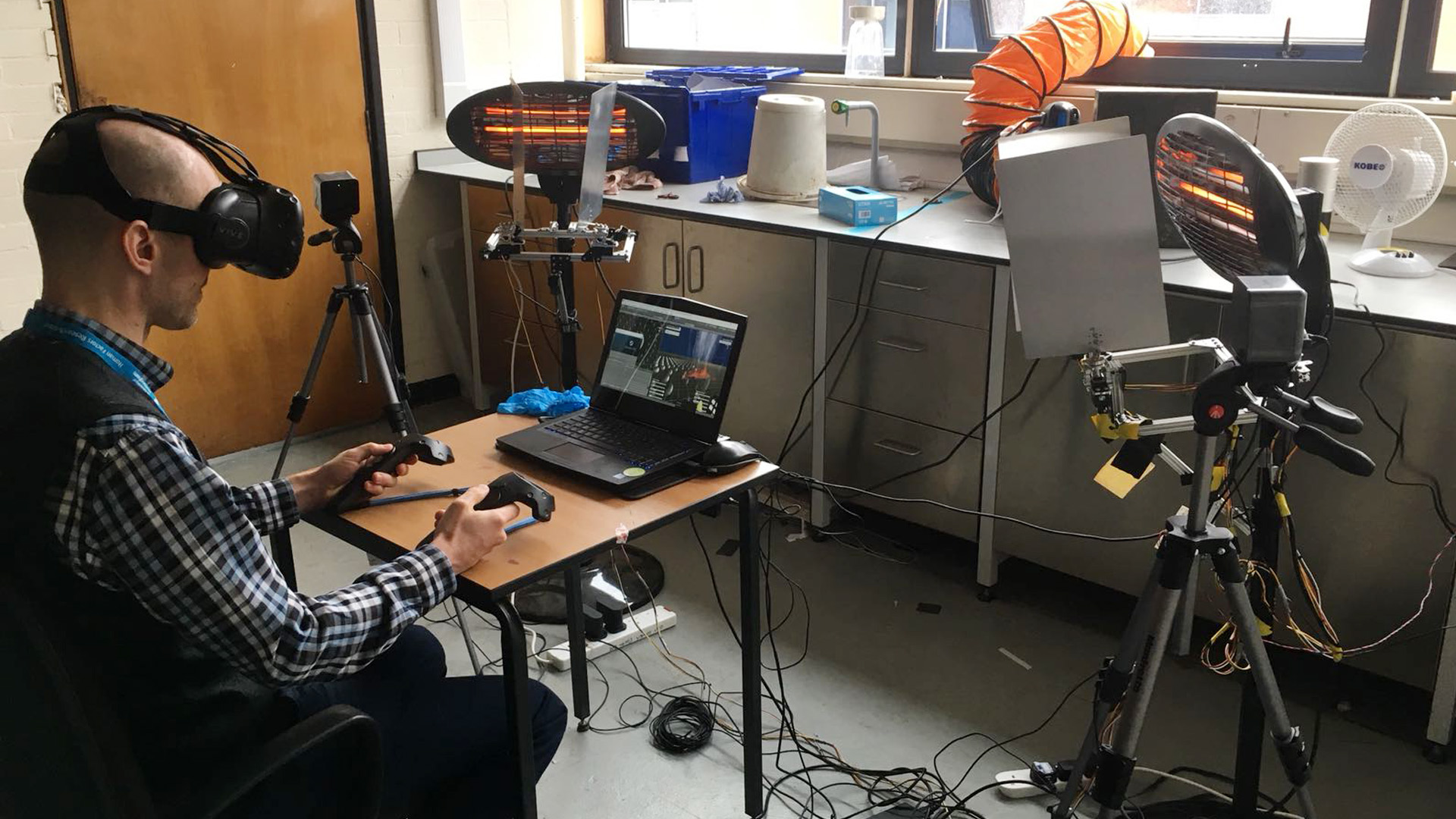

The British Institute for Occupational Safety tasked the Human Factors research group at the University of Nottingham with developing a prototype fire evacuation simulator capable of delivering not just audiovisual feedback, but also real-time heat and scent stimulation.

What I did

My responsibility was to produce an interactive virtual office environment. This entailed 3D modelling, texturing, and scripting a range of in-game events, such as a dynamically spreading fire. I also produced a video overview (see below) for a CHI conference installation. The project was supervised by Professors Glyn Lawson and Sue Cobb. Daniel Miller was responsible for the hardware and its integration with the rest of the system. Emily Shaw and Tessa Roper carried out the user studies and the subsequent quantitative analysis. Tony Glover produced a data visualization application for the study.

Workflow

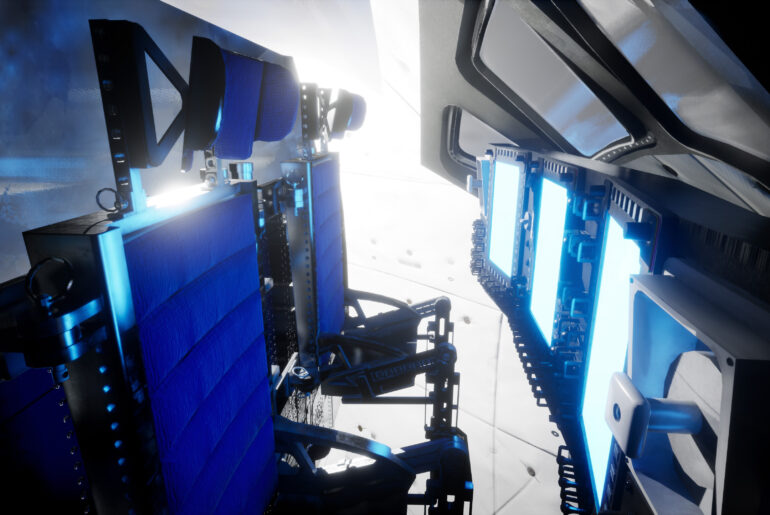

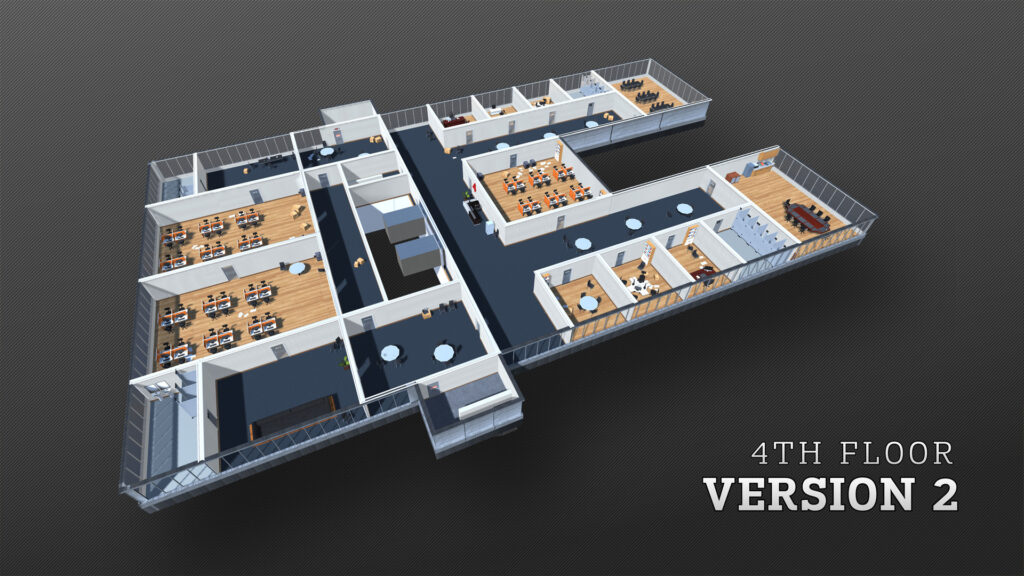

I modelled and textured four distinct floor layouts, consistent with a typical office building.

The 3D content was then imported into Unity and brought to life by simulating various fire scenarios.

Extensive user testing revealed that multisensory interfaces improved the validity of user behavior, for example by reducing participants' game-like attitudes. The project and its findings have attracted substantial interest from media outlets.